AMD year-end showcase: MI300 takes on Nvidia head-on as Lisa Su outlines three AI strategies for the first time

Published: 2023-12-18

On December 6, local time, at the AMD Advancing AI event in San Jose, AMD CEO Lisa Su made a series of major announcements that capped a strong year-end: the long-rumored MI300 was launched; the promise of an exascale-class APU was finally delivered; and the ROCm software stack made significant progress, continuing to shore up AMD's weak spots in its head-on contest with CUDA.

With the rapid development of generative AI, global demand for high-performance computing keeps rising, intensifying competition in AI chips. Large cloud vendors led by Microsoft and Meta are also looking to develop their own AI chips or back new suppliers to reduce their dependence on Nvidia. Microsoft and Meta were among the first customers for the AMD MI300 at launch.

Two MI300 series: the MI300X large GPU and the MI300A data center APU

In an AI chip market dominated by Nvidia, AMD is one of the few companies with high-end GPUs that can both train and deploy AI, and the industry sees it as a credible alternative for generative AI and large-scale AI systems. The MI300 series of accelerators is central to AMD's strategy for competing with Nvidia. AMD is now directly challenging the dominance of the Nvidia H100 with more powerful GPUs and an innovative CPU+GPU platform.

Lisa Su said in her opening speech that the market for data center accelerators, including GPUs and FPGAs, will grow by more than 50% per year over the next four years, from $30 billion in 2023 to more than $150 billion in 2027. In all her years in the business, she said, she has never seen a technology innovate at this pace.
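The growth figures quoted above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes simple annual compounding over the four years from 2023 to 2027, and the function names are made up for this example.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value of `start` after compounding at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Market sizes quoted in the article, in billions
market_2023 = 30.0
market_2027 = 150.0
years = 2027 - 2023

cagr = implied_cagr(market_2023, market_2027, years)
at_fifty_percent = project(market_2023, 0.50, years)

print(f"Growth rate implied by 30 -> 150 over {years} years: {cagr:.1%}")
print(f"Market in 2027 at exactly 50% per year: {at_fifty_percent:.1f} billion")
```

Going from 30 to exactly 150 works out to just under 50% per year, while compounding at a flat 50% lands slightly above 150, so "more than 50% per year" and "more than 150 billion" are consistent with each other.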

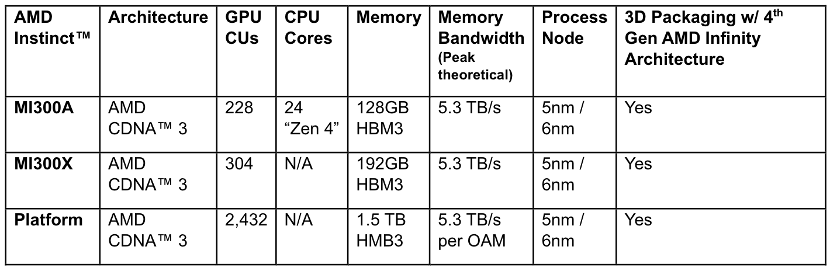

The newly released MI300 currently comes in two series. The MI300X is a large GPU that offers the memory bandwidth needed for leading-edge generative AI and the training and inference performance needed for large language models. The MI300A integrates CPU and GPU, based on the latest CDNA 3 architecture and Zen 4 CPU cores, and delivers breakthrough performance for HPC and AI workloads. The MI300 is not only a new generation of AI accelerator but also AMD's vision for the next generation of high-performance computing.

In the field of generative AI, the MI300X challenges Nvidia head-on

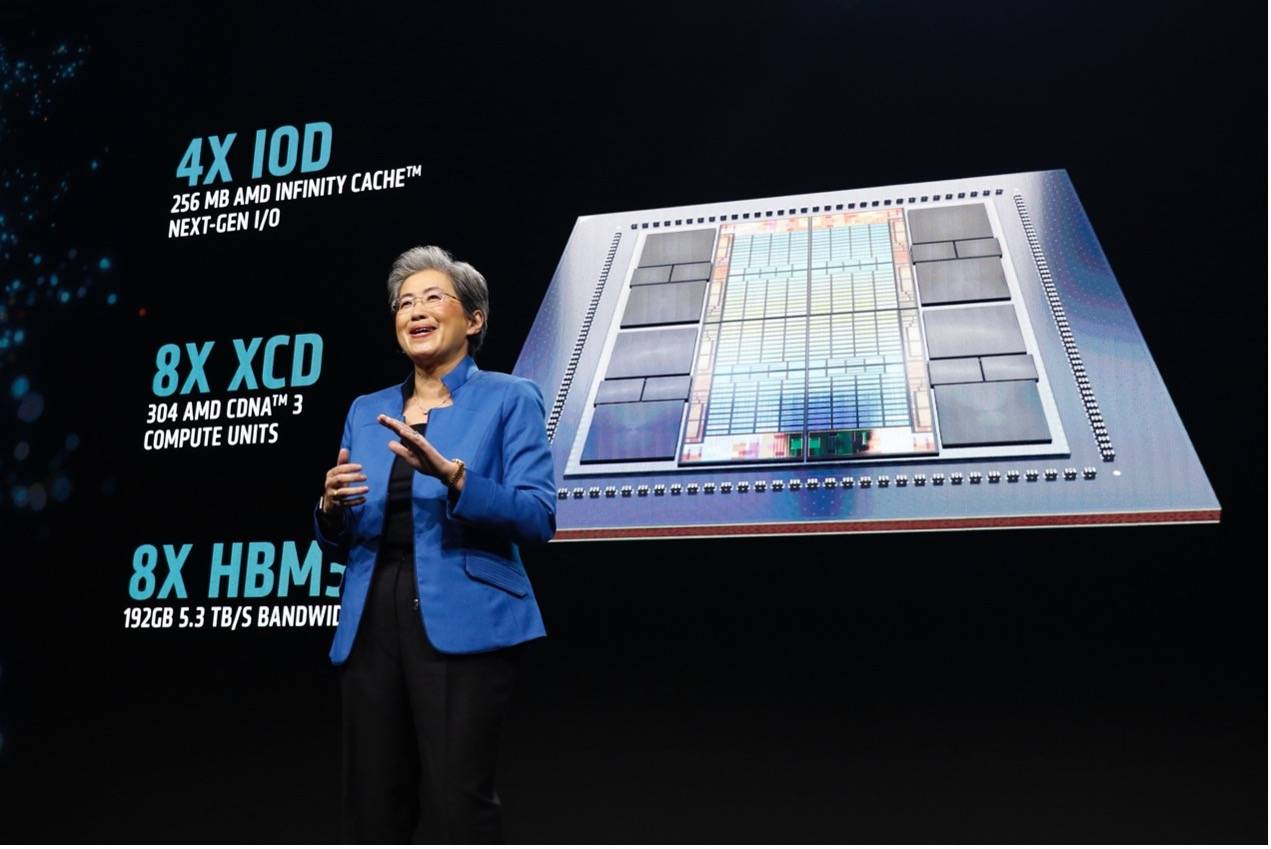

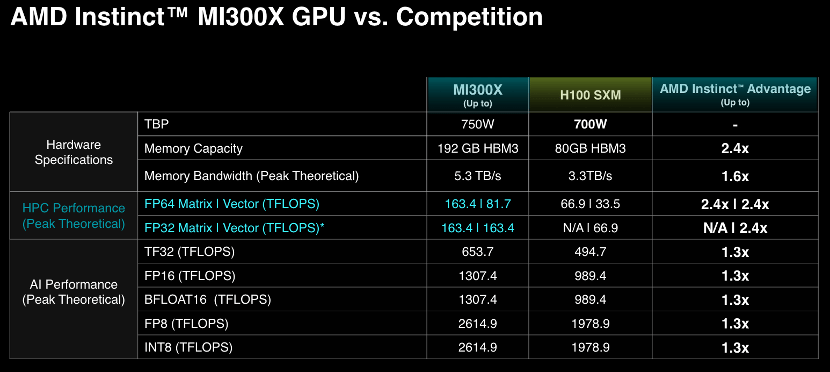

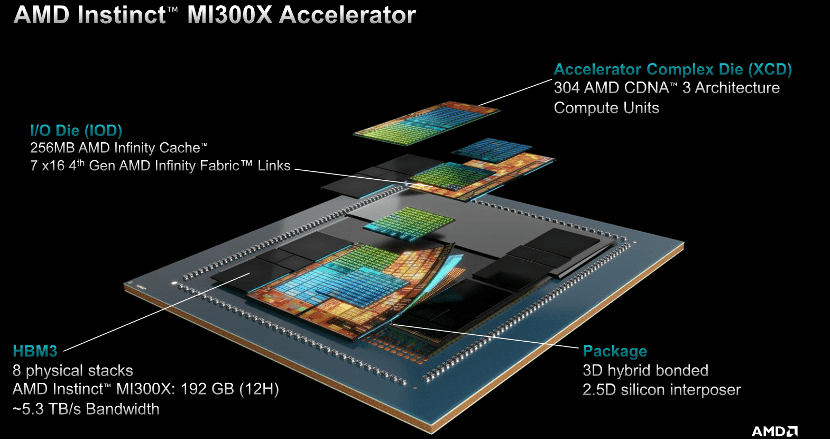

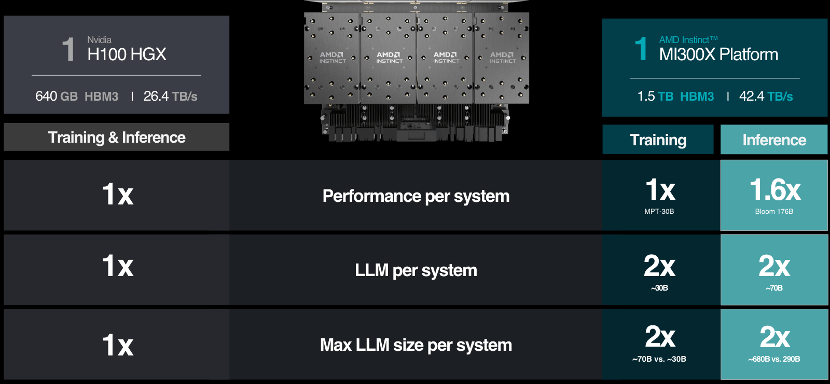

The AMD MI300X has up to 8 XCDs (accelerator complex dies), 304 compute units (CUs), and 8 HBM3 stacks. Memory capacity reaches 192GB, 2.4 times that of the Nvidia H100 (80GB), with HBM memory bandwidth of up to 5.3TB/s and Infinity Fabric bandwidth of 896GB/s. The advantage of so much onboard memory is that fewer GPUs are needed to hold a large model in memory, which avoids the power and hardware costs of spreading the model across more GPUs.

According to Su, generative AI is the most demanding workload ever, requiring thousands of accelerators to train and refine models with billions of parameters. The "law" is simple: the more compute you have, the more capable the model and the faster it generates answers.

"GPUs are at the center of the generative AI world. Everyone I talk to says the availability and power of GPU computing is the single most important factor in AI adoption," she said. "The MI300X is our most advanced product to date and the most advanced AI accelerator in the industry."

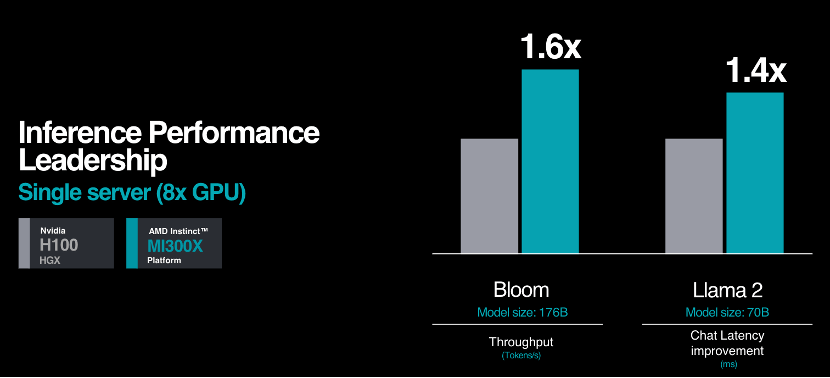

The Instinct platform is a generative AI platform built on an OCP-standard design. It includes eight MI300X accelerators, for a total of 1.5TB of HBM3 memory. Compared with the Nvidia H100 HGX, AMD says the Instinct platform delivers higher throughput, for example up to 1.6x the performance when running LLM inference on models such as the 176B-parameter BLOOM, and that it is the only option on the market today that can run inference on a 70B-parameter model such as Llama 2 on a single accelerator.

In addition, AMD announced a partnership with Microsoft Azure to bring its latest AI hardware to a leading cloud platform, a sign of how quickly AI is moving into production across the industry. Bringing the AMD Instinct MI300X into the Azure ecosystem further strengthens AMD's position in cloud AI.
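A rough, weights-only estimate shows why memory capacity per accelerator matters for the models named here. This is a back-of-the-envelope sketch, not a sizing tool: it assumes 2 bytes per parameter (FP16/BF16), uses decimal gigabytes, and ignores the KV cache, activations, and framework overhead, all of which raise real requirements. The helper names are hypothetical.

```python
import math

# Capacities quoted in the article, in GB
MI300X_GB = 192
H100_GB = 80
GPUS_PER_PLATFORM = 8


def weight_footprint_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; assumes 2 bytes/param (FP16/BF16)."""
    # 1e9 params per billion, 1e9 bytes per (decimal) GB
    return params_billion * 1e9 * bytes_per_param / 1e9


def min_accelerators(params_billion: float, capacity_gb: float) -> int:
    """Fewest accelerators whose combined memory holds the weights."""
    return math.ceil(weight_footprint_gb(params_billion) / capacity_gb)


platform_gb = GPUS_PER_PLATFORM * MI300X_GB
print(f"Eight MI300X: {platform_gb} GB (about {platform_gb / 1024:.1f} TB)")

for name, size_b in [("Llama 2 70B", 70), ("BLOOM 176B", 176)]:
    print(
        f"{name}: {weight_footprint_gb(size_b):.0f} GB of weights, "
        f"MI300X x{min_accelerators(size_b, MI300X_GB)}, "
        f"H100 x{min_accelerators(size_b, H100_GB)}"
    )
```

Under these assumptions, a 70B-parameter model needs about 140GB for its weights, which fits within one 192GB MI300X but not within one 80GB H100.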

Data center MI300A: the exascale-class APU becomes a reality

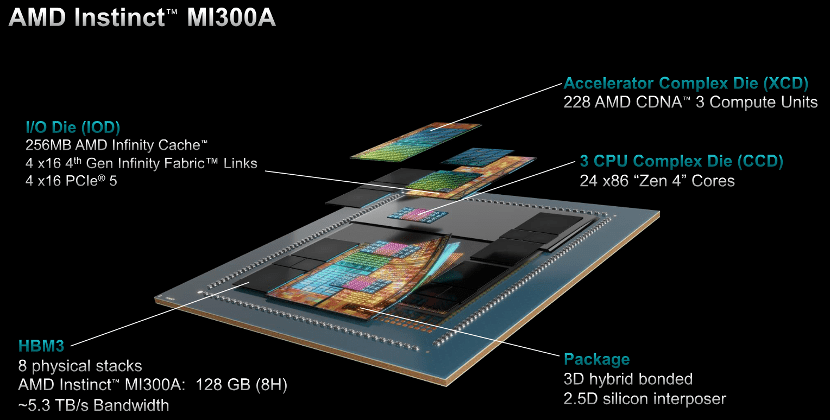

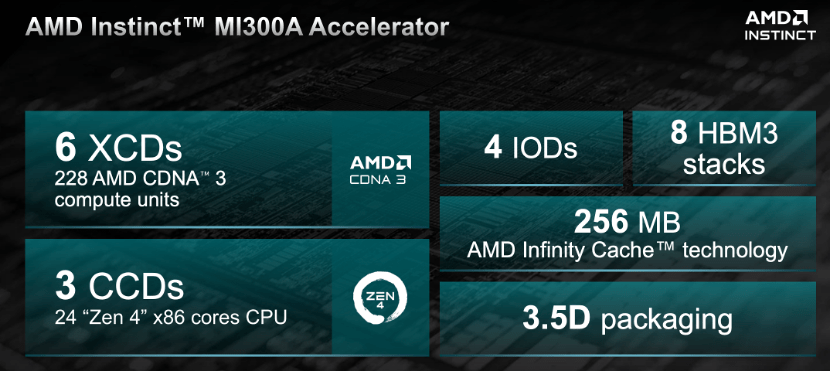

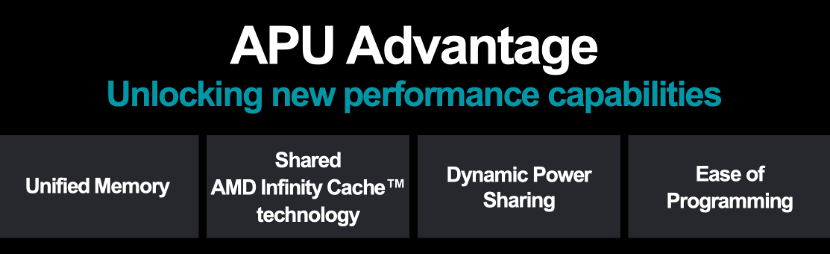

The MI300A is the world's first data center APU for HPC and AI. It combines CDNA 3 GPU cores, the latest AMD "Zen 4" x86 CPU cores, and 128GB of HBM3 memory through 3D packaging and the fourth-generation AMD Infinity architecture, delivering the performance that HPC and AI workloads require. Compared with the previous-generation AMD Instinct MI250X, it delivers 1.9 times the FP32 performance per watt on HPC and AI workloads.

Energy efficiency is critical for HPC and AI because these applications involve extremely data- and resource-intensive workloads. The MI300A APU integrates CPU and GPU cores in a single package, providing an efficient platform while also delivering the training performance needed to accelerate the latest AI models. Internally, AMD has set an energy-efficiency goal it calls 30x25: a 30-fold improvement in the energy efficiency of server processors and AI accelerators between 2020 and 2025.
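To get a feel for how aggressive the 30-fold goal is, it helps to convert it into a yearly rate. The sketch below assumes the improvement compounds evenly over five annual steps from 2020 to 2025, which is a simplification made for illustration and not something the article states.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Per-year multiplier that compounds to `total_gain` over `years` years."""
    return total_gain ** (1 / years)


# Goal quoted in the article: 30x between 2020 and 2025
total_gain = 30.0
years = 2025 - 2020

factor = annual_factor(total_gain, years)
print(f"Required improvement: about {factor:.2f}x per year")

# Cumulative gain after each year at that steady rate
for year in range(1, years + 1):
    print(f"{2020 + year}: {factor ** year:.1f}x")
```

At a steady pace, the goal amounts to nearly doubling energy efficiency every year for five years.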

Where the MI300X has eight XCDs, the MI300A uses six to make room for the CPU chiplets (CCDs). The advantage of an APU is that the CPU and GPU share unified memory and cache resources, so data no longer has to be copied back and forth between them. The parallel portion of a workload runs on the GPU and the serial portion on the CPU, with power shifted between the two as needed, and performance improves because the copy step is gone. The APU also gives customers an easy-to-program GPU platform, high-performance compute, fast AI training, and good energy efficiency for demanding HPC and AI workloads.

The next-generation El Capitan supercomputer, rated at 2 exaFLOPS (two quintillion floating-point operations per second), will be powered by the AMD MI300A and is expected to become the fastest supercomputer in the world.

AI open source software strategy continues to strengthen

AMD also released ROCm 6, the latest version of its open software platform, and reaffirmed its commitment to contributing state-of-the-art libraries to the open source community. Su said ROCm 6 represents a major leap forward for AMD's software tools and further advances AMD's vision of open source AI software development. AMD says that, when running Llama 2 text generation, MI300 series accelerators with ROCm 6 deliver roughly eight times the performance of the previous generation of hardware and software.

ROCm 6 adds key features for generative AI, including FlashAttention, HIPGraph, and vLLM. AMD is working with software partners such as PyTorch, TensorFlow, and Hugging Face to build a robust AI ecosystem that simplifies the deployment of AMD AI solutions.

In addition, AMD has strengthened its ability to offer AI customers open software through its acquisition of the open source software company Nod.ai, which helps customers easily deploy high-performance AI models tuned for AMD hardware. It is also continuing to build out its open source AI software strategy through ecosystem collaboration with Mipsology.

AMD is committed to building an open software ecosystem, drawing on a broad open source community to accelerate innovation while giving customers more flexibility. Against Nvidia's powerful CUDA ecosystem in AI, this is a key step in AMD establishing itself as a strong AI competitor, and it also helps AMD break into the existing CUDA market.

Three generations of product evolution: Lisa Su outlines three AI strategies for the first time

Not long ago, when AMD reported its Q3 2023 results, it forecast that data center GPU revenue would exceed $2 billion in 2024, a substantial increase. That accelerated revenue forecast rested largely on the ability of AI-focused products to serve a wide variety of AI workloads across industries, which is one reason the newly launched MI300 has drawn so much industry attention.

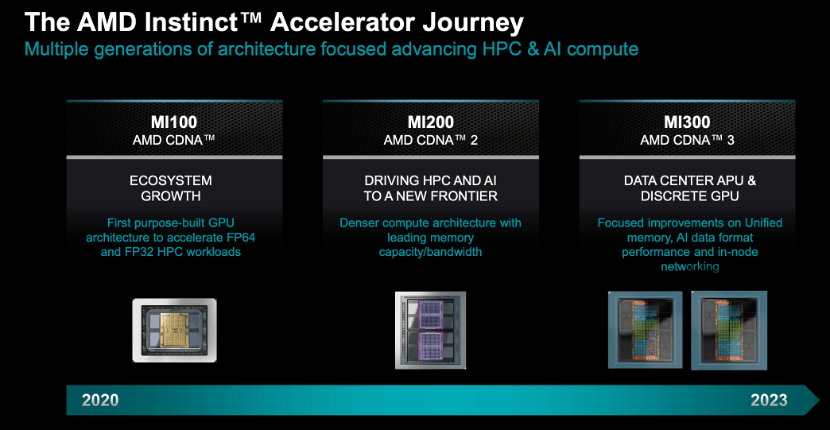

AMD's accelerator journey began with the first-generation MI100, based on the CDNA compute architecture, whose HPC applications and deployments paved the way for the broader commercial success of the MI200. The MI200 has been in volume production for a few years now, has succeeded in large HPC and cutting-edge AI deployments, and powers the system ranked number one on the TOP500 supercomputer list.

It was through refining these two generations that AMD gained a deeper understanding of AI workloads and software requirements, laying a solid foundation for the MI300. Today, the MI300A targets HPC and AI applications and is the world's first data center APU, while the MI300X is designed specifically for generative AI, with hardware and software improvements that further lower the barrier to adoption.

At the event, Lisa Su laid out AMD's three major AI strategies for the first time. First, AMD continues to provide general-purpose, high-performance, energy-efficient GPUs, CPUs, and compute solutions for AI training and inference. Second, it will continue to expand its open, mature, developer-friendly software platform so that leading AI frameworks, libraries, and models are fully supported on AMD hardware. Third, AMD will expand co-innovation with partners, including cloud providers, OEMs, and software developers, to further accelerate AI innovation.