How big is the AI server market? Here's an opportunity for passive components

Published: 2024-01-05

Recently, news broke that Alibaba Cloud had suspended rentals of its A100 servers, which suggests that domestic supply of NVIDIA computing power is heading for a shortage. According to a research report released by Guosheng Securities, GPU cards have become harder to obtain, so computing-power providers have begun scrambling for H800 servers, and prices have surged to 3 million yuan per server. Both reports suggest that price increases are coming to the computing-power rental industry and that competition for AI server orders is intensifying.

AI servers are seeing a wave of orders.

The "battle of a hundred models" drives AI server demand

According to industry insiders, demand for AI servers this year has exceeded expectations, driven mainly by the development of large models. Since OpenAI launched ChatGPT, major Chinese companies have accelerated their efforts. Internet and technology companies are actively developing their own language models, such as Baidu's ERNIE Bot (Wenxin Yiyan), iFLYTEK's Spark and Huawei's Pangu. Major end-market players are also actively integrating large models. It is this "battle of a hundred models" that has ignited demand for AI servers.

According to TrendForce's forecast, global AI server shipments are expected to reach 1.183 million units in 2023, an increase of 38.4% year-on-year. By 2025, that number is expected to climb to nearly 1.895 million units, a compound annual growth rate of 41.2%.
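A compound annual growth rate follows the standard definition CAGR = (end / start)^(1 / years) − 1. The Python sketch below implements that formula. The function name `cagr` is illustrative only. Applied to the two unit forecasts above (1.183 million in 2023, about 1.895 million in 2025, two years apart), it gives roughly 26.6% per year. The 41.2% figure therefore presumably refers to a different base period or measure, which the forecast summary does not state.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Return the compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Constant yearly rate that turns start_value into end_value over `years` years.
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Unit forecasts quoted in the text, in millions of servers.
    shipments_2023 = 1.183
    shipments_2025 = 1.895
    rate = cagr(shipments_2023, shipments_2025, years=2)
    print(f"Implied CAGR, 2023 to 2025: {rate:.1%}")
```
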

In terms of market structure, the AI server market is relatively concentrated. Inspur Information ranks first with 20.2% of the global market, and other leading domestic manufacturers include Sugon (Zhongke Shuguang), Talkweb Information, Digital China and Unisplendour.

AI server core components

The core chips of AI servers mainly include CPUs and accelerators such as GPUs, NPUs, ASICs and FPGAs, along with memory, storage, networking and other components.

Compared with traditional central processing units (CPUs), GPUs have many more compute cores and higher memory bandwidth, which greatly improves computing efficiency and graphics rendering speed.

With the release of NVIDIA's A100, H100 and other products, GPUs now hold a significant advantage in computing power. Their role has gradually shifted from their original purpose, graphics processing, to general computing tasks.

Because GPUs combine very high computing power with strong deep learning performance, they have become the most suitable hardware for AI training. This has made them the first choice for acceleration chips in servers.

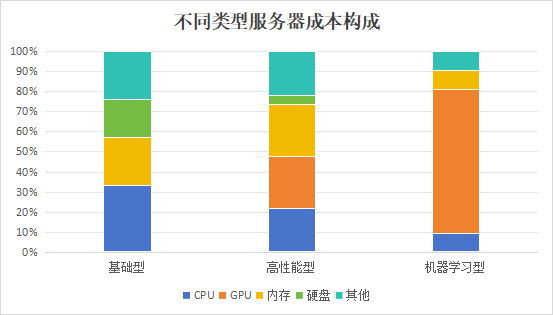

According to IDC, chips used for computing and storage account for about 70% of server cost. In machine learning servers, GPUs alone account for 72.8% of the cost.

Figure: AI server. Data sources: Bank Research Center, Oriental Securities Research Institute; compiled by "Semiconductor Device Application".

By the number of GPUs they carry, AI servers can be further divided into four-, eight- and sixteen-GPU servers. Eight-way AI servers, equipped with eight GPUs, are the most common.

Among companies listed on China's domestic stock market, the main players with GPU chip businesses are Cambricon, Hygon Information and Jingjia Micro.

Passive components should not be ignored

Beyond accelerators such as GPUs, passive components in AI servers, such as resistors, capacitors and inductors, play a role in the overall system that cannot be ignored. High-quality passive components deliver more stable voltage and current. This keeps the AI server running normally, with fast transient response and low ripple.
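Server processors are fed by buck-converter voltage regulators. A common first-order estimate for the peak-to-peak output ripple of such a converter makes the link between capacitor quality and ripple concrete: ΔV ≈ ΔI_L / (8 · f_sw · C_out) + ΔI_L · ESR. Here ΔI_L is the inductor ripple current, f_sw the switching frequency, C_out the output capacitance and ESR the capacitors' equivalent series resistance. The Python sketch below implements that estimate. The function name and the operating point are hypothetical, chosen for illustration rather than taken from any real AI server design.

```python
def buck_output_ripple(delta_i_l: float, f_sw: float, c_out: float, esr: float) -> float:
    """First-order peak-to-peak output voltage ripple of a buck converter, in volts.

    delta_i_l: peak-to-peak inductor ripple current (A)
    f_sw:      switching frequency (Hz)
    c_out:     total output capacitance (F)
    esr:       equivalent series resistance of the output capacitor bank (ohm)
    """
    capacitive_term = delta_i_l / (8.0 * f_sw * c_out)  # ripple from charging/discharging C_out
    esr_term = delta_i_l * esr                           # ripple from ripple current through the ESR
    # Adding the two terms is a conservative estimate: in practice they do not peak at the same instant.
    return capacitive_term + esr_term


if __name__ == "__main__":
    # Hypothetical operating point: 10 A ripple current at 500 kHz into 1 mF of output capacitance.
    for esr in (1e-3, 0.5e-3):
        ripple_mv = buck_output_ripple(10.0, 500e3, 1e-3, esr) * 1e3
        print(f"ESR = {esr * 1e3:.1f} mOhm -> ripple = {ripple_mv:.1f} mV")
```

In this hypothetical case, halving the ESR cuts the estimated ripple from 12.5 mV to 7.5 mV. This is why low-ESR capacitors matter on low-voltage, high-current rails.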

Low-loss passive components can also improve the power conversion efficiency of AI servers, saving energy and benefiting the environment. Inductors in particular play an important role in the reliability and stability of AI servers, which places higher demands on their performance.
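Component losses feed directly into conversion efficiency. As a rough illustration, the Python sketch below counts only the conduction loss in an inductor's winding resistance (DCR), P_loss = I_rms² · DCR, and computes efficiency as η = P_out / (P_out + P_loss). Real converters also lose power in switches, capacitor ESR and magnetic cores. Those losses are lumped into a hypothetical `other_loss` parameter. The function name and all values in the example are illustrative assumptions.

```python
def conversion_efficiency(p_out: float, i_rms: float, dcr: float, other_loss: float = 0.0) -> float:
    """Efficiency = P_out / (P_out + losses), counting inductor DCR conduction loss explicitly.

    p_out:      output power delivered to the load (W)
    i_rms:      RMS current through the inductor (A)
    dcr:        DC resistance of the inductor winding (ohm)
    other_loss: all remaining losses lumped together (W), a hypothetical placeholder
    """
    inductor_loss = i_rms ** 2 * dcr  # I^2 * R conduction loss in the winding
    return p_out / (p_out + inductor_loss + other_loss)


if __name__ == "__main__":
    # Hypothetical phase: 25 A at 1 V output (25 W), with 2 W of other losses lumped together.
    for dcr in (0.4e-3, 0.2e-3):
        eta = conversion_efficiency(25.0, 25.0, dcr, other_loss=2.0)
        print(f"DCR = {dcr * 1e3:.1f} mOhm -> efficiency = {eta:.2%}")
```

In this hypothetical case, halving the inductor DCR raises efficiency by a little under half a percentage point per phase.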

Closing thoughts

As large models continue to develop, the AI server market will keep rising in both volume and price. This trend benefits every link of the server industry chain, including key areas such as computing chips, server manufacturing, memory and passive components. Companies in these areas are expected to see a surge of opportunities.

In computing chips, the steady launch of more efficient and more powerful GPUs and CPUs will be the key battleground. Chip manufacturers can meet demand for higher performance and lower power consumption through continuous innovation and technology upgrades.

Server foundries have the opportunity to meet market demand by providing high-quality, efficient foundry services. At the same time, they can also establish close partnerships with chip manufacturers to jointly promote the development of the AI server market.

Memory suppliers, meanwhile, can increase R&D investment and introduce higher-capacity, higher-speed memory products to meet AI servers' growing storage demand.

In the field of passive components, suppliers of high-quality resistors, capacitors and inductors can gain a competitive advantage in the market by providing products with better stability and performance.